Struggling with bias in your job descriptions?

You could be turning off potential talent because of the language you use...

At Ongig, we recognize that bias in job descriptions affects who applies to your job postings, which in turn shapes the diversity of your workforce.

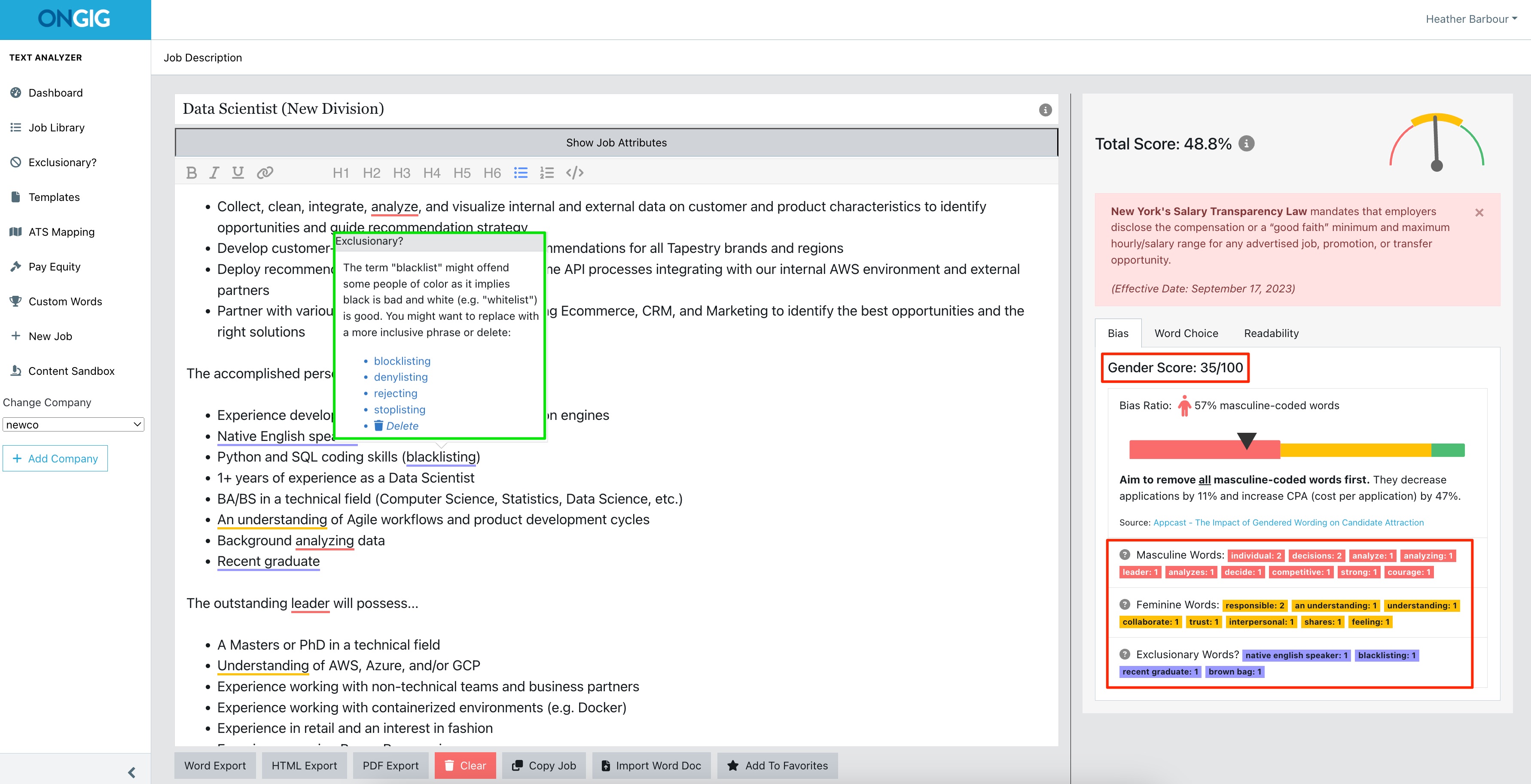

Our software helps you find (and remove) 12+ types of bias in job descriptions, ensuring your postings are welcoming to all potential candidates -- especially talent from underrepresented groups.

Here’s how Ongig’s Text Analyzer helps solve your bias problem:

- Flags biased language in job descriptions -- Our software scans your content for biased language related to gender, age, race, disability, LGBTQ+ status, ethnicity, former felons, elitism, mental health, religion, and more.

- Offers more inclusive suggestions -- The Text Analyzer flags problematic words and phrases and suggests more inclusive alternatives, helping your job ads attract a diverse pool of candidates.

- Gives real-time feedback on inclusivity -- Our tool gives real-time feedback on how inclusive your job descriptions are, so you can make improvements in just a few clicks.

12+ Types of Bias Ongig Flags:

If your overall goal is gender-neutral, inclusive job postings, Text Analyzer can help. Our software gives each job posting a "Gender Score" based on the ratio of masculine-coded to feminine-coded language. Plus, phrases that might offend or exclude people from underrepresented groups are flagged in a special "Exclusionary" section. This makes it easy for your recruiters or hiring managers to find words they may want to remove or replace.
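This post doesn't spell out how the Gender Score is calculated beyond that ratio, so here is a hypothetical Python sketch of the general idea, not Ongig's implementation. It assumes small, made-up word lists (`MASCULINE_CODED`, `FEMININE_CODED`) and a hypothetical `gender_score` function that reports the masculine-coded share of all coded words, along with the matched words so a reviewer can see what to replace.

```python
import re

# Illustrative word lists only (assumption): real gender-coding lists are much
# longer, and Ongig's actual lists and weighting are not described in this post.
MASCULINE_CODED = {"aggressive", "dominant", "rockstar", "competitive", "fearless"}
FEMININE_CODED = {"supportive", "collaborative", "nurturing", "empathetic", "warm"}


def gender_score(text: str) -> dict:
    """Hypothetical scoring: count masculine- and feminine-coded words.

    "masculine_share" is masculine-coded words divided by all coded words,
    so 0.5 means balanced. It is None when no coded words are found.
    """
    words = re.findall(r"[a-z]+", text.lower())
    masculine = [w for w in words if w in MASCULINE_CODED]
    feminine = [w for w in words if w in FEMININE_CODED]
    total = len(masculine) + len(feminine)
    share = len(masculine) / total if total else None
    return {"masculine": masculine, "feminine": feminine, "masculine_share": share}


if __name__ == "__main__":
    ad = "We want a dominant, aggressive rockstar who is also collaborative."
    result = gender_score(ad)
    # Prints the matched words and the masculine-coded share (0.75 here).
    print(result["masculine"], result["feminine"], result["masculine_share"])
```

In the sample ad, three of the four coded words are masculine-coded, so the share is 0.75; swapping "aggressive" for a neutral word like "bold" would lower it.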

Now, here are some examples of bias we often find in job descriptions -- with tips and suggestions for improvements:

Gender Bias

Gender bias happens when job descriptions use language that favors one gender over another, e.g., "dominant leader" or "rockstar developer." This can discourage women, or people who don't identify with a particular gender, from applying. Ongig’s Text Analyzer suggests gender-neutral language to make your postings more inclusive.

Examples of Gender-Neutral Language:

- Replace "salesman" with "salesperson"

- Use "bold" instead of "aggressive"

- Tip: Use Text Analyzer to swap gendered words with gender-neutral alternatives.

Age Bias

Age bias appears when job descriptions imply a preference for younger candidates, for example through phrases like "digital native" or "energetic." Our software helps you use age-neutral language that focuses on skills, not age.

Examples of Age-Neutral Language:

- Use "person passionate about technology" instead of "digital native"

- Replace "new graduates" with "graduates"

- Tip: Emphasize experience over age

Racial Bias

Racial bias in job descriptions can exclude (or offend) people of color through phrases like "native English speaker." Ongig’s Text Analyzer flags these and suggests racially inclusive alternatives.

Examples of Racially Inclusive Language:

- Use "meet and greet" instead of "pow wow"

- Replace "nitty gritty" with "details"

- Tip: Avoid culturally specific references

Disability Bias

Disability bias shows up when job descriptions require physical tasks that aren't relevant to the job, which can exclude candidates with disabilities. Ongig’s software highlights these biases and offers inclusive alternatives.

Examples of Disability-Inclusive Language:

- Use "inputting" instead of "typing"

- Replace "walking" with "moving"

- Tip: Focus on "essential" job functions rather than physical abilities

LGBTQ+ Bias

LGBTQ+ bias can show up through gendered language or assumptions about family structures. Ongig helps ensure your language is inclusive of all gender identities and sexual orientations.

Examples of LGBTQ-Inclusive Language:

- Use "team member" instead of "he" or "she"

- Use "parental leave" instead of "maternity leave"

- Tip: Avoid assumptions about family structures

Former Felons Bias

Bias against former felons appears in job descriptions that automatically exclude candidates with criminal records. Ongig’s software suggests language that offers fair opportunities for all.

Examples of Inclusive Language for Former Felons:

- Replace "convicted felon" with "former felon"

- Use "background check" instead of "criminal history check"

- Tip: Focus on qualifications and skills rather than criminal history

Elitism Bias

Elitism bias can favor candidates from prestigious institutions or universities. Ongig’s Text Analyzer helps emphasize skills and experience over specific educational backgrounds.

Examples of Elitism-Free Language:

- Replace "bachelor's degree from a top university" with "bachelor's degree"

- Use "MBA" instead of "MBA from a top business school"

- Tip: Avoid naming specific institutions unless necessary

Mental Health Bias

Mental health bias in job descriptions can exclude or offend candidates with mental health conditions. Ongig flags these biases and offers inclusive language alternatives.

Examples of Mental Health-Inclusive Language:

- Replace "sanity check" with "review" or "audit"

- Tip: Offer flexibility and support for mental health needs

- Tip: Avoid language that implies constant high energy or stress tolerance

Religion Bias

Religion bias happens when job descriptions favor certain religious practices. Ongig’s software helps ensure your postings respect all religious beliefs.

Examples of Religion-Inclusive Language:

- Tip: Avoid references to specific religious holidays or practices

- Tip: Emphasize respect for all religious beliefs

By flagging and suggesting alternatives for biased language, our software helps you create inclusive job postings that attract diverse talent. Request a demo today to learn how Ongig can help solve your biased job posting problems.